Rice DSP alum Randall Balestriero (PhD, 2021) has accepted an assistant professor position at Brown University in the Computer Science Department. Since graduating, he has served as a postdoc with Yann LeCun at Meta/FAIR and at GQS, Citadel.

Rice DSP PhD and valedictorian Lorenzo Luzi (PhD, 2024) has accepted an assistant teaching professor position in the Data 2 Knowledge (D2K) Lab and Department of Statistics at Rice University.

Two DSP group papers have been accepted by the International Conference on Machine Learning (ICML) 2024 in Vienna, Austria

The U.S. National Science Foundation announced today a strategic investment of $90 million over five years in SafeInsights, a unique national scientific cyberinfrastructure aimed at transforming learning research and STEM education. Funded through the Mid-Scale Research Infrastructure Level-2 program (Mid-scale RI-2), SafeInsights is led by Prof. Richard Baraniuk at OpenStax at Rice University, who will oversee the implementation and launch of this new research infrastructure project of unprecedented scale and scope.

SafeInsights aims to serve as a central hub, facilitating research coordination and leveraging data across a range of major digital learning platforms that currently serve tens of millions of U.S. learners at all education levels and across science, technology, engineering and mathematics.

With its controlled and intuitive framework, unique privacy-protecting approach and emphasis on the inclusion of students, educators and researchers from diverse backgrounds, SafeInsights will enable extensive, long-term research on the predictors of effective learning, which are key to academic success and persistence.

Two DSP group papers have been accepted by the International Conference on Learning Representations (ICLR) 2024 in Vienna, Austria

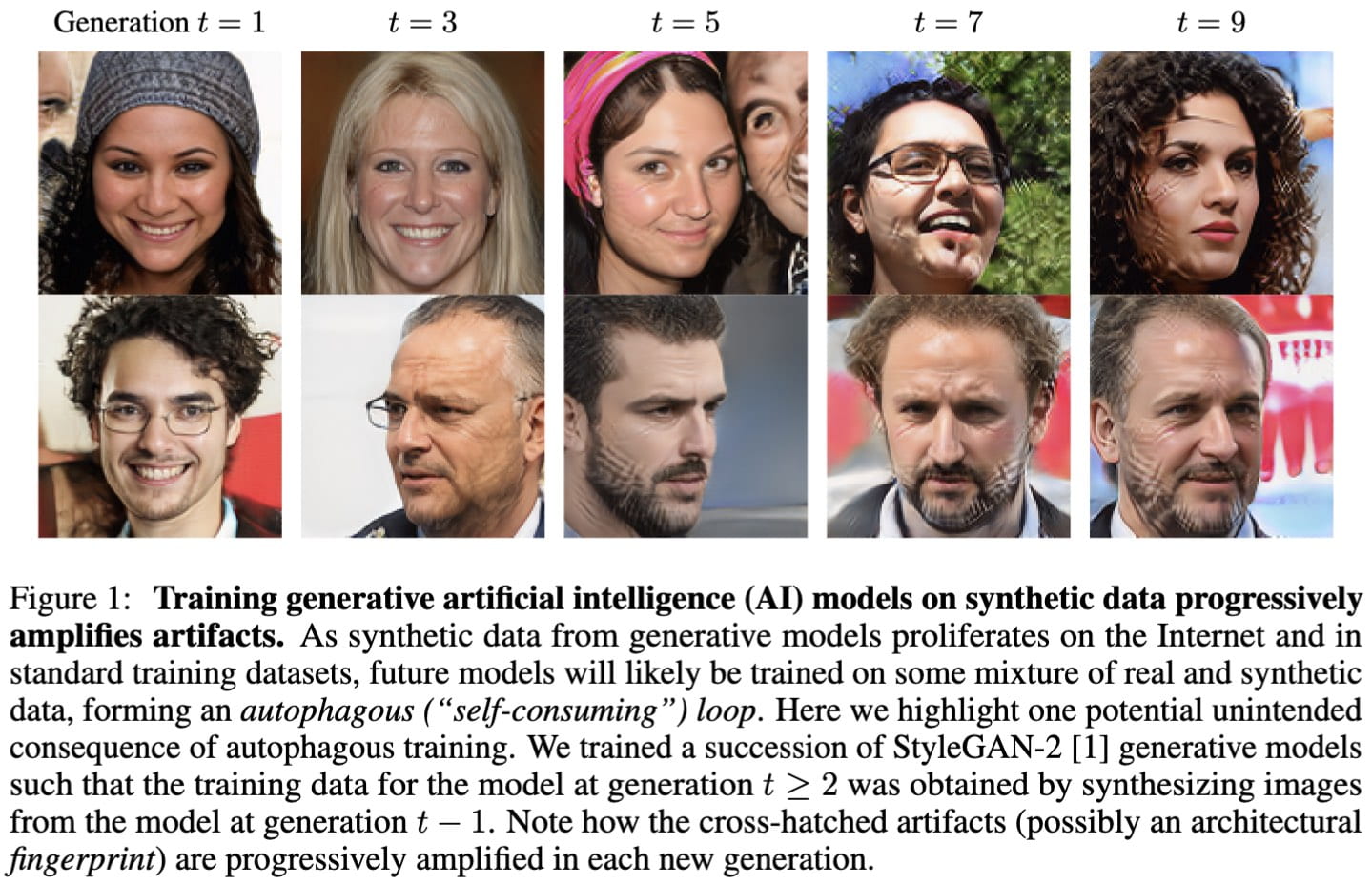

Self-Consuming Generative Models Go MAD

http://arxiv.org/abs/2307.01850

To Appear at ICLR 2024

Sina Alemohammad, Josue Casco-Rodriguez, Lorenzo Luzi, Ahmed Imtiaz Humayun, Hossein Babaei, Daniel LeJeune, Ali Siahkoohi, Richard G. Baraniuk

Abstract: Seismic advances in generative AI algorithms for imagery, text, and other data types have led to the temptation to use synthetic data to train next-generation models. Repeating this process creates an autophagous ("self-consuming") loop whose properties are poorly understood. We conduct a thorough analytical and empirical analysis using state-of-the-art generative image models of three families of autophagous loops that differ in how fixed or fresh real training data is available through the generations of training and in whether the samples from previous-generation models have been biased to trade off data quality versus diversity. Our primary conclusion across all scenarios is that without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease. We term this condition Model Autophagy Disorder (MAD), making analogy to mad cow disease.

"Free textbooks and other open educational resources gain popularity," Physics Today 76 (7), 18–21 (2023)

"The prices of college textbooks have skyrocketed: From 2011 to 2018, they went up by 40.6% in the US, according to the Bureau of Labor Statistics’ Consumer Price Index. That can add up to as much as $1000 for a single semester. So it’s no surprise that freely available, openly licensed textbooks, lectures, simulations, problem sets, and more—known collectively as open educational resources (OERs)—are having a moment."

Richard Baraniuk presented the plenary talk "The Local Geometry of Deep Learning" at the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP) in Rhodes, Greece, in June 2023.

Two DSP group papers have been accepted by the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023 in Vancouver, Canada